December 18, 2025

[DOWNLOAD] How We Evaluate AI Search for the Agentic Era

Featured resources.

Paying 10x More After Google’s num=100 Change? Migrate to You.com in Under 10 Minutes

September 18, 2025

Blog

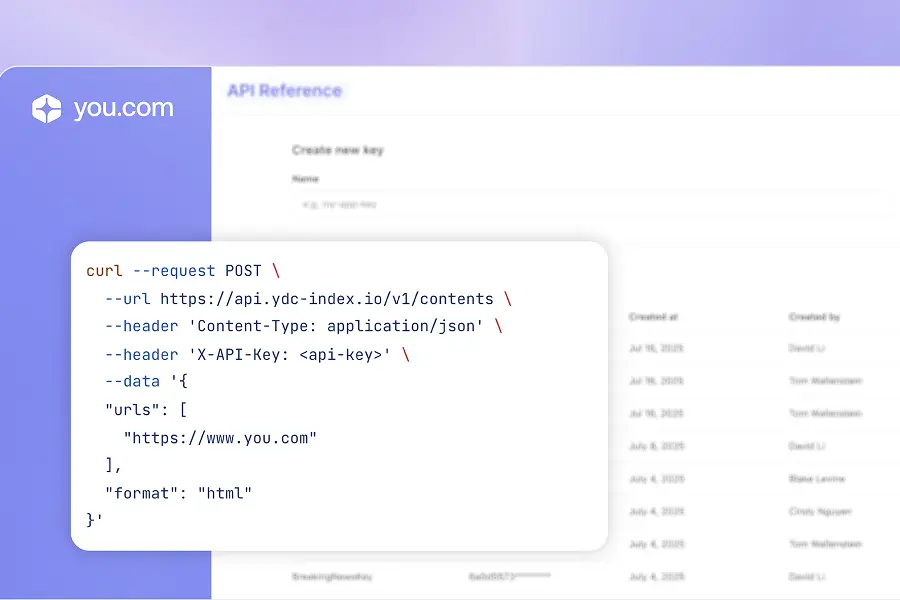

September 2025 API Roundup: Introducing Express & Contents APIs

September 16, 2025

Blog

You.com vs. Microsoft Copilot: How They Compare for Enterprise Teams

September 10, 2025

Blog

All resources.

Browse our complete collection of tools, guides, and expert insights — helping your team turn AI into ROI.

Company

Next Big Things In Tech 2024

News & Press