December 18, 2025

[DOWNLOAD] How We Evaluate AI Search for the Agentic Era

Featured resources.

Paying 10x More After Google’s num=100 Change? Migrate to You.com in Under 10 Minutes

September 18, 2025

Blog

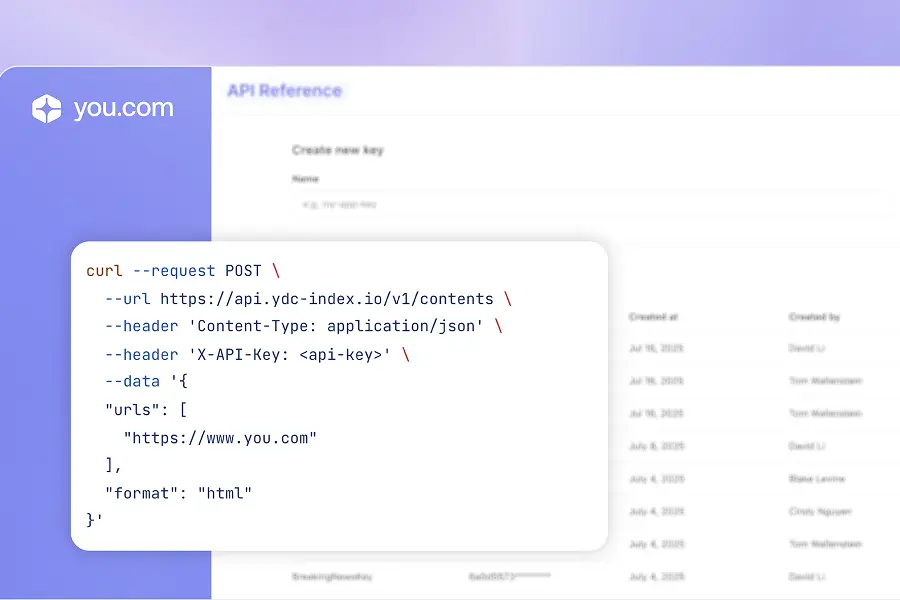

September 2025 API Roundup: Introducing Express & Contents APIsSeptember 2025 API Roundup: Introducing Express & Contents APIs

September 2025 API Roundup: Introducing Express & Contents APIs

September 16, 2025

Blog

You.com vs. Microsoft Copilot: How They Compare for Enterprise Teams

September 10, 2025

Blog

All resources.

Browse our complete collection of tools, guides, and expert insights to help your team turn AI into ROI.

AI Research Agents & Custom Indexes

What the Heck Are Vertical Search Indexes?

January 20, 2026

Blog

AI Research Agents & Custom Indexes

The Agent Loop: How AI Agents Actually Work (and How to Build One)

January 16, 2026

Blog

AI 101

Before Superintelligent AI Can Solve Major Challenges, We Need to Define What 'Solved' Means

January 14, 2026

News & Press

AI Search Infrastructure

AI Search Infrastructure: The Foundation for Tomorrow’s Intelligent Applications

January 9, 2026

Blog

Comparisons, Evals & Alternatives

How to Evaluate AI Search in the Agentic Era: A Sneak Peek

January 8, 2026

Blog

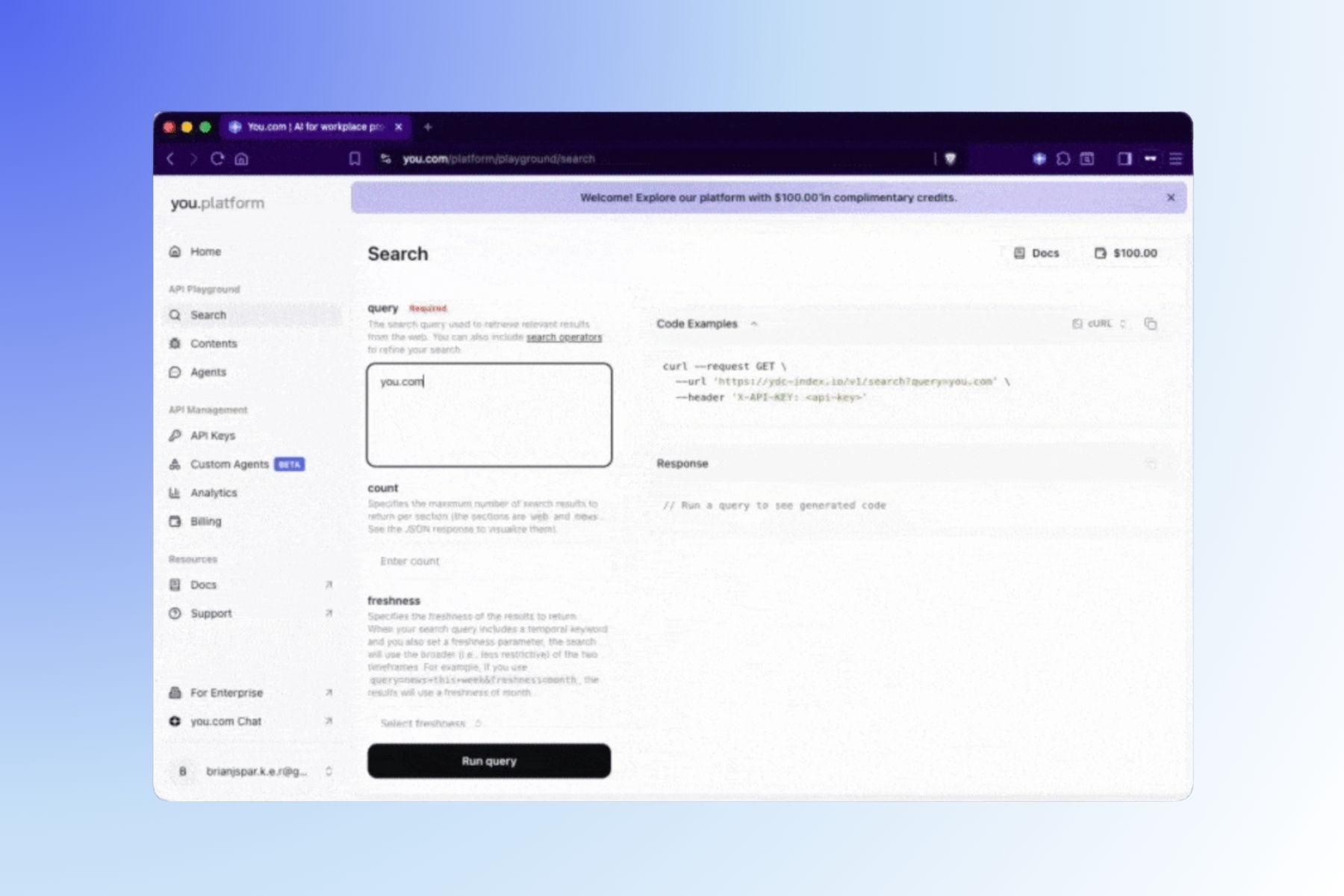

API Management & Evolution

You.com Hackathon Track

January 5, 2026

Guides

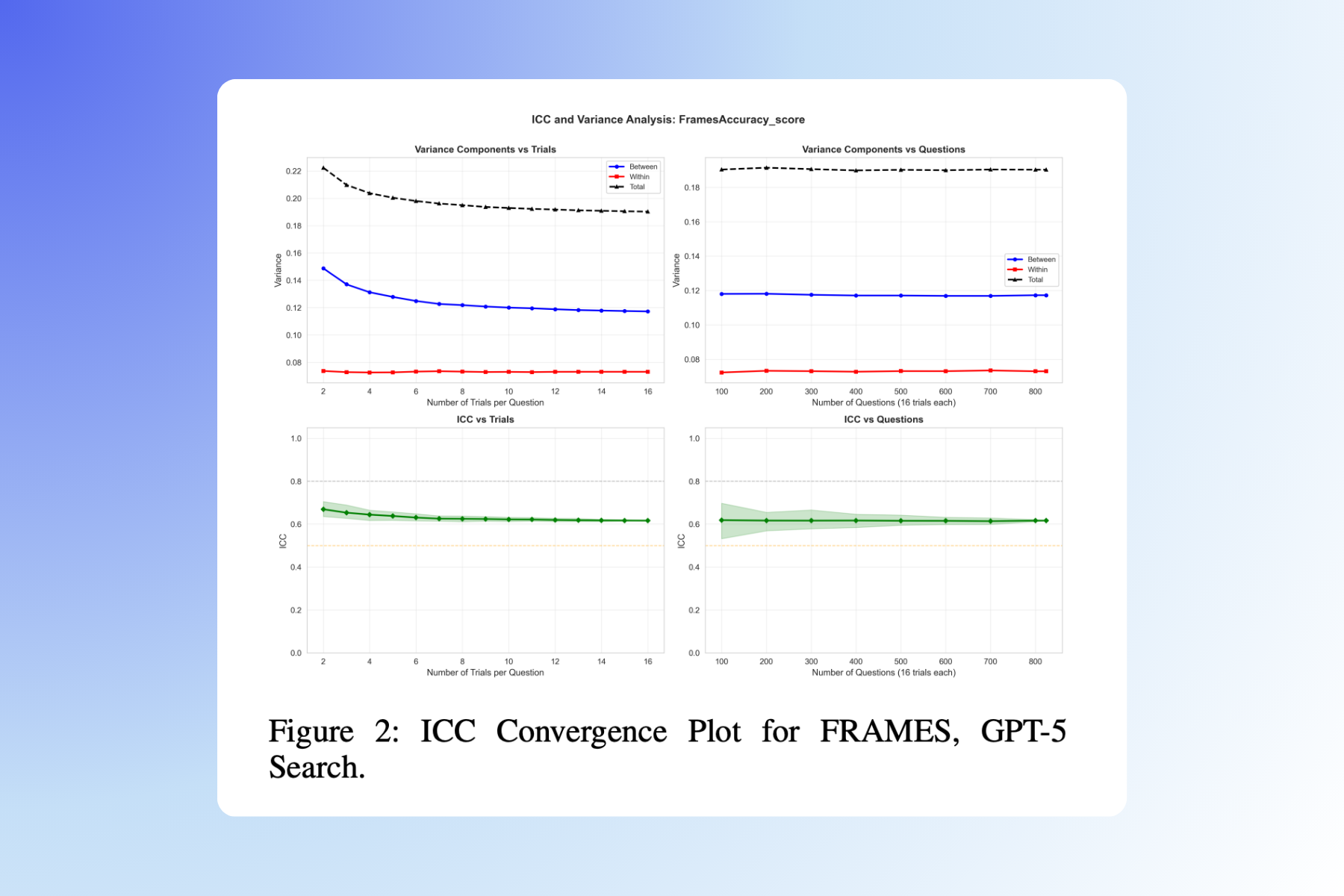

Randomness in AI Benchmarks: What Makes an Eval Trustworthy?Randomness in AI Benchmarks: What Makes an Eval Trustworthy?

Comparisons, Evals & Alternatives

Randomness in AI Benchmarks: What Makes an Eval Trustworthy?

December 18, 2025

Blog

Product Updates

December 2025 API Roundup: Evals, Vertical Index, New Developer Tooling and More

December 16, 2025

Blog